Program Overview

Mission Summary

- Converge legacy CBSD delivery items with refreshed rack, power, and network layouts.

- Guarantee dual-room resiliency: Sequencer Room (instrument + UPS) and Datacenter Room (compute, storage, data protection).

- Stand up automated transfers to Crown AWS tenant for analytics and partner sharing.

Key Highlights

- Four VLANs: VLAN4 (10 Gb production), VLAN7 (40 Gb data), VLAN2 (IPMI), and VLAN10 (work area).

- Three 500 TB tiers (primary, secondary, clinical isolation) with the data-protection bridge.

- Crown-standard TACACS+, syslog, RBAC enforcement on the management fabric.

Business & Data Flow

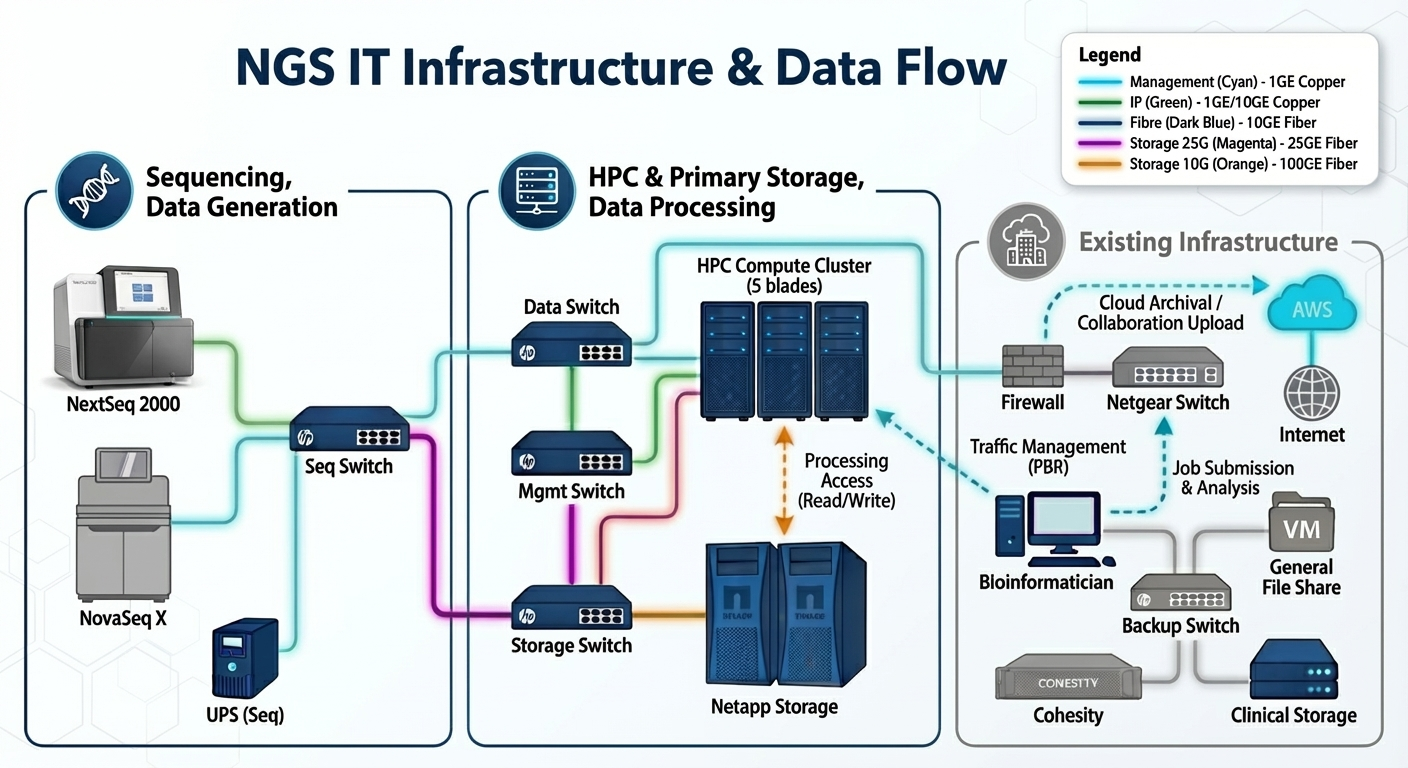

NextSeq 2000 captures production runs today while the NovaSeq X lane is staged; both write via CIFS to the sequencer switch and post health/control data over 1 Gb management links.

10 Gb data and 25 Gb storage fabrics (Catalyst 9200L + Nexus 9300) carry payloads into the HPC rack while IPMI/OOB stays isolated on VLAN2.

Dell PowerEdge R760xs rack servers execute pipelines under Slurm, writing to the NetApp HA pair and flagging workloads for discovery vs. clinical storage.

Bioinformaticians access results via the legacy Netgear core, Cohesity snapshots replicate to clinical storage + AWS, and job submissions keep the loop closed.

Global Architecture

The architecture view now mirrors the provided “NGS IT Infrastructure & Data Flow” reference: sequencing and data generation on the left, HPC and primary storage at the center, and existing infrastructure/AWS services on the right, all tied together by the same color-coded fabrics.

Design Principles & Delivery Milestones

Milestones span physical environment prep, procurement, on-site deployment, and go-live support.

- Sequencer Lab (Room #124): Place NextSeq 2000, lock UPS and power whip, and keep floor space reserved for the later NovaSeq X install; 10 Gb cabling quote underway with install finishing by January.

- Server room: Repurpose the document archive as the additional NGS data center, pending approval (Lonnie coordinating).

- Cooling: Datacenter stack (5× Dell R760xs @ ~8.2k BTU/h each, 3× NetApp FAS2820 @ ~3.4k, dual Nexus 9300 + dual Catalyst 9200L @ ~5.5k, APC rack UPS overhead ≈2.5k, NextSeq 2000 ≈6.8k) drives ≈62,000 BTU/h. Recommend at least a 7-ton (≈24 kW) expansion coil/CRAH with 20% headroom. Lonnie owns procurement.

- Power: Distribution looks good to go.

- Review and validate hardware spec: Confirm sizing, resiliency, and vendor lead times before release.

- Place order: Kick off procurement so hardware lands by late January.

- Network: Implement on-prem routing, segmentation, and service connectivity for the deployment window.

- Switches / IP subnets / VLAN: Data 25 Gb, IPMI 1 Gb, and sequencer 10 Gb fabrics validated with updated IP plan.

- Cabling: Pull and certify intra-rack and inter-room runs to support compute, storage, and sequencers.

- Internet: Stand up AWS split-network connectivity paths.

- NGS — Crown interconnect: Enable bioinformatic workstation access with the agreed Crown link.

- Server / cluster: Rack-mount hardware, install OS, and build the 5-node cluster.

- Data analysis testing: Execute representative pipelines to verify throughput and validation criteria.

- Storage: Install physical shelves and configure lifecycle rules for backup and retention.

- Data flow automation: Wire up sharing, access, and restriction policies end-to-end.

- Go-live 1 Feb: Cut over NextSeq 2000 production traffic and validate NovaSeq X staging paths, then deliver managed support through April.
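
The cooling estimate in the list above can be checked with a short calculation. The sketch below is illustrative only: it assumes the per-unit figures apply to each Dell server and each NetApp unit, and that the switch and UPS figures are totals. The names `loads_btu_h` and `cooling_requirement` are hypothetical. Under that reading the itemized loads sum to roughly 66,000 BTU/h, a little above the ≈62,000 quoted, so the grouping of inputs is worth reconciling; with 20% headroom either figure still fits within a 7-ton unit.

```python
# Rough cooling-load check. The grouping of the figures is an assumption
# (per-unit for servers and NetApp, totals for switches and UPS overhead).
BTU_PER_TON = 12_000           # 1 ton of refrigeration = 12,000 BTU/h
KW_PER_BTU_H = 0.00029307107   # 1 BTU/h is about 0.293 W

loads_btu_h = {
    "Dell R760xs (5 x 8,200)": 5 * 8_200,
    "NetApp FAS2820 (3 x 3,400)": 3 * 3_400,
    "Nexus 9300 + Catalyst 9200L (total)": 5_500,
    "APC rack UPS overhead": 2_500,
    "NextSeq 2000": 6_800,
}


def cooling_requirement(loads, headroom=0.20):
    """Return the summed load and the design load with headroom applied."""
    total = sum(loads.values())
    design = total * (1 + headroom)
    return {
        "total_btu_h": total,
        "design_btu_h": design,
        "design_tons": design / BTU_PER_TON,
        "design_kw": design * KW_PER_BTU_H,
    }


result = cooling_requirement(loads_btu_h)
for key, value in result.items():
    print(f"{key}: {value:,.1f}")
```

Changing `headroom` or any entry in `loads_btu_h` (for example, adding a NovaSeq X line once its heat output is confirmed) re-derives the tonnage without touching the rest of the plan.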

Network Subnet Plan

| Domain | Function | Subnet / Mask | Gateway | Remark |
|---|---|---|---|---|
| NGS | Machine / Sequencer | 179.15.53.0/24 (255.255.255.0) | 179.15.53.254 (Seq Switch) | 172.15.53.253 as the L3 interconnect IP of Storage Switch. |
| HPC | Storage for NGS & HPC | 179.15.52.0/24 (255.255.255.0) | 179.15.52.254 (Storage Switch) | Storage shares disks for NGS & HPC via the CIFS protocol. |
| HPC | Data for HPC | 179.15.51.0/24 (255.255.255.0) | 179.15.51.254 (OA Core) | |
| IPMI | Mgmt for NGS & HPC | 179.15.50.0/24 (255.255.255.0) | 179.15.50.254 (OA Core) | Out-of-band management of NGS & HPC infrastructure. |
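
A subnet plan like the one above is easy to sanity-check before it is pushed to the switches. The following is a minimal sketch using Python's standard `ipaddress` module; the `SUBNET_PLAN` dictionary and `check_plan` helper are hypothetical names, and the values are copied from the table. It confirms that each mask matches its prefix, each gateway sits inside its subnet, and no two subnets overlap. It also tests the interconnect address from the Remark column, which as written (172.15.53.253) falls outside the sequencer subnet and may be worth confirming.

```python
import ipaddress

# Values copied from the subnet table; the dictionary layout is illustrative.
SUBNET_PLAN = {
    "NGS sequencer": ("179.15.53.0/24", "255.255.255.0", "179.15.53.254"),
    "HPC storage": ("179.15.52.0/24", "255.255.255.0", "179.15.52.254"),
    "HPC data": ("179.15.51.0/24", "255.255.255.0", "179.15.51.254"),
    "IPMI": ("179.15.50.0/24", "255.255.255.0", "179.15.50.254"),
}


def check_plan(plan):
    """Return a list of human-readable problems found in the plan."""
    problems = []
    networks = {}
    for name, (cidr, mask, gateway) in plan.items():
        net = ipaddress.ip_network(cidr)  # raises ValueError if host bits are set
        networks[name] = net
        if str(net.netmask) != mask:
            problems.append(f"{name}: mask {mask} does not match {cidr}")
        if ipaddress.ip_address(gateway) not in net:
            problems.append(f"{name}: gateway {gateway} is outside {cidr}")
    names = list(networks)
    for i, first in enumerate(names):
        for second in names[i + 1:]:
            if networks[first].overlaps(networks[second]):
                problems.append(f"{first} overlaps {second}")
    return problems


problems = check_plan(SUBNET_PLAN)
print("Plan problems:", problems)

# Interconnect address exactly as written in the Remark column.
interconnect = ipaddress.ip_address("172.15.53.253")
interconnect_in_seq = interconnect in ipaddress.ip_network("179.15.53.0/24")
print("Interconnect inside sequencer subnet:", interconnect_in_seq)
```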

Hardware Stack (Dec 2025 quotation)

Line items are transcribed from San Diego HPC&NGS-20251219.xlsx. Pricing is intentionally removed so this page focuses on scope, capacity, and deployment readiness.

| Item | Brand / Model | Qty | Function | Notes |
|---|---|---|---|---|
| Server & Storage | | | | |
| Analysis Servers | Dell PowerEdge R760xs | 5 | Compute nodes | Dual Intel Gold 6526Y, 8×64 GB RDIMM, 3×1.92 TB SSD (RAID5), Broadcom 57414 10/25 Gb, iDRAC Enterprise. |
| NGS Storage (HA) | NetApp FAS2820 (25 Gb SFP28) | 2 | Primary & secondary landing tiers | Dual controller, 48×22 TB, SnapMirror HA, 3-year 4‑hour support. |
| NGS Storage (Option) | Dell PowerVault ME5212 + ME412 | 1 | Alternate HA landing tier | Dell ME5212 storage with 25 Gb FC Type‑B dual controllers, 2×2 SFP+ FC25 optics, 28×24 TB 12 Gb SAS 7.2K drives, 5U rack kit, dual 2200 W PSU, ProSupport Plus 4‑hour/36‑month. |
| NAS / Clinical Storage | NetApp FAS2820 (10 Gb SFP+) | 1 | Isolated clinical / NAS tier | Dual controller, 48×22 TB, 10 Gb data path for segregated workloads. |
| NAS Storage (Option) | Dell PowerVault ME5212 | 1 | Alternate NAS tier | Dell ME5212 storage with 25 Gb FC Type‑B dual controllers, 2×2 SFP+ FC25 optics, 28×24 TB 12 Gb SAS 7.2K drives, 5U rack kit, dual 2200 W PSU, ProSupport Plus 4‑hour/36‑month. Notes: confirm SAN switch, add 25 Gb NIC + 2×SFP to any server needing this array. |
| Precision Consoles | Dell Precision 3680 Tower | 2 | Bioinformatics workstations | Intel i7‑14700, 64 GB RAM, 2×2 TB SSD RAID1, Windows 11 Pro, 3‑year onsite support. |
| OS & Platform Stack | RHEL 9 Subscription | 5 | Cluster OS & middleware | Includes Slurm 23, GPFS entitlement, data-protection tooling, and AWS CLI/Snowball integration. |
| Network & Optics | | | | |
| Sequencer Fabric Switch | Cisco N9K-C93108TC-FX3 | 1 | Sequencer aggregation | 48×100M/1/10G-T plus 6×40/100 G QSFP28; dual PSUs and fans. |
| Storage Fabric Switch | Cisco N9K-C93180YC-FX3 | 1 | 25 Gb storage/data fabric | 48×1/10/25 Gb SFP28 plus 6×40/100 G QSFP28; dual PSUs and fans. |
| Data Access Switch | Cisco C9200L-48T-4X-E | 1 | Production VLAN4 | 48×1 Gb, 4×10 Gb uplinks, Network Essentials license, DNAC subscription. |
| Management / IPMI Switch | Cisco C9200L-48T-4X-E | 1 | VLAN2 + work access | 48×1 Gb, 4×10 Gb uplinks, dual PSU. |
| QSFP Active Optical Cable | Cisco QSFP-100G-AOC5M | 2 | Fabric interconnect | 5 m 100 Gb AOC connecting the Nexus pair. |
| 25 Gb SFP28 Optics | Cisco SFP-25G-SR-S | 18 | Server & storage uplinks | Fan-out to 5 servers (2 each) plus two NetApp HA pairs (4 each). |
| 10 Gb SFP+ Optics | Cisco MA-SFP-10GB-SR | 4 | Clinical NAS connectivity | Merges clinical NetApp shelves into legacy fabric. |
| OM4 Fiber (2 m) | LC-LC, MM | 8 | Storage patching | Short links between NetApp controllers and Nexus. |
| OM4 Fiber (5 m) | LC-LC, MM | 10 | Server uplinks | 2×5 runs for compute racks. |
| OM4 Fiber (10 m) | LC-LC, MM | 4 | NAS reach | NAS shelves to storage switch. |
| Cat6 Copper (2 m) | Factory bundle | 10 | Server management | IPMI and console jumpers (two bundles of five). |
| Cat6 Copper (5 m) | Factory bundle | 6 | Storage + Nexus | 2×2 storage runs plus 2× management uplinks. |
| Cat6 Copper (10 m) | Factory bundle | 4 | NAS + Catalyst | NAS redundancy and Catalyst uplinks. |
| Cat6A Copper (10 m) | Factory bundle | 5 | Sequencer data | High-bandwidth copper paths from sequencer room. |
| Accessory Materials | Cable trays / labels | 1 | Structured cabling kit | Includes ladder tray hardware, tie-downs, and labeling stock. |
| Facility & Power | | | | |
| Dedicated Cold Aisle / HVAC | Facility scope | 1 | Cooling | Load recompute: ≈54,000 BTU/h for NextSeq 2000 + planned NovaSeq X + compute/storage; spec ≥5-ton coil. |
| 42U Racks + Dual PDUs | Standard cabinets | 2 | Rack footprint | Includes blanking panels, monitoring strips, and A/B PDUs. |
| UPS · Datacenter | APC Smart-UPS Online SRT10KXLI | 2 | Rack-level UPS | One 10 kVA online UPS per rack delivering dedicated power paths with N+1 coverage across the pair. |
| UPS · Sequencer / Lab | APC Smart-UPS Online SRT3000XLA | 1 | Instrument UPS | 3 kVA online UPS dedicated to the single NextSeq 2000 with circuits ready for future NovaSeq X. |
| Structured Cabling | Network link works | 1 | Fiber & copper plant | Part of facility build; ties sequencer room to datacenter. |
| Services & Support | | | | |
| Remote Implementation Service | Dongke Service | 1 | Architecture & integration | WAN/HPC design, NetApp HA configuration, validation testing. |
| On-site Service | Dongke Deployment Block | 1 | Hands-on install | Rack/stack, OS installs, cutover support. |
| Annual Maintenance | Dongke Service | 1 | Run & support | Remote monitoring, updates, and escalation coverage. |
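
Several quantities in the table above are explained by a breakdown in the Notes column, so they can be cross-checked before the order is placed. The sketch below is a minimal illustration, assuming the breakdowns read as simple products and sums; `QUOTED`, `DERIVED`, and `mismatches` are hypothetical names, and only rows whose notes spell out the arithmetic are included.

```python
# Quantities as listed in the Qty column.
QUOTED = {
    "25 Gb SFP28 optics": 18,
    "OM4 fiber (5 m)": 10,
    "Cat6 copper (2 m)": 10,
    "Cat6 copper (5 m)": 6,
}

# Quantities re-derived from the Notes column (interpretation is an assumption).
DERIVED = {
    "25 Gb SFP28 optics": 5 * 2 + 2 * 4,  # 5 servers x 2, plus 2 HA pairs x 4
    "OM4 fiber (5 m)": 2 * 5,             # 2 x 5 runs for compute racks
    "Cat6 copper (2 m)": 2 * 5,           # two bundles of five
    "Cat6 copper (5 m)": 2 * 2 + 2,       # 2 x 2 storage runs + 2 management uplinks
}

# Collect any line item where the quoted and derived counts disagree.
mismatches = {
    item: (QUOTED[item], DERIVED[item])
    for item in QUOTED
    if QUOTED[item] != DERIVED[item]
}

for item in QUOTED:
    status = "MISMATCH" if item in mismatches else "ok"
    print(f"{item}: quoted {QUOTED[item]}, derived {DERIVED[item]} ({status})")
```

An empty `mismatches` dictionary means the listed quantities agree with their own notes; it does not confirm that spare optics or patch leads have been budgeted.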

Execution Anchors

Each delivery stage below maps directly to the Physical Environment → Hardware & Procurement → On-site Deployment → Go-live & Post-launch timeline bands.

- Physical Environment: Ready Room #124 for the NextSeq 2000, keep NovaSeq X footprint reserved, finish 10 Gb cabling quotes, confirm UPS/power paths, and release the ≥5-ton cooling upgrade.

- Hardware & Procurement: Review the Dec 2025 quotation, validate specs, lock BTU + power inputs, and place orders for Dell, NetApp, Cisco, cabling, and services.

- On-site Deployment: Rack the two 42U cabinets, install the five-node cluster plus NetApp shelves, terminate optics/copper, and stand up network/services through data-flow testing.

- Go-live & Post-launch: Cut over the NextSeq 2000 data path on 1 Feb, pilot NovaSeq X prep flows, monitor SLAs, and deliver ongoing maintenance + support through April.